Latest posts

The data life cycle is a framework that outlines the stages that data goes through from its initial creation or capture to its eventual deletion or archival. Here are the typical steps in the data life...

The languages of Data Science: For anyone just getting started on their data science journey, the range of technical options can be overwhelming. There is a dizzying amount of choice when it comes to programming...

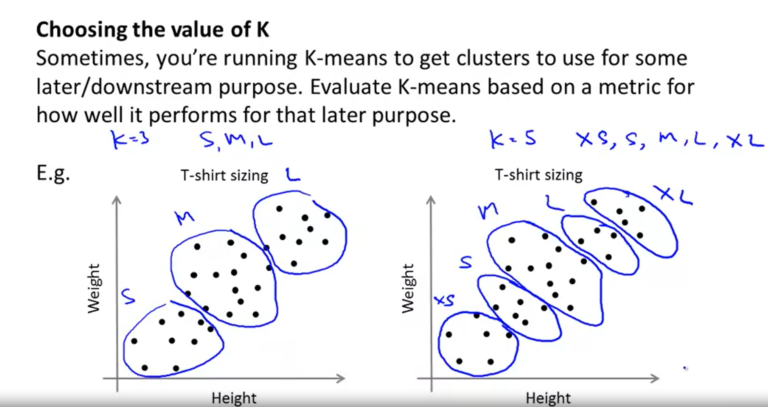

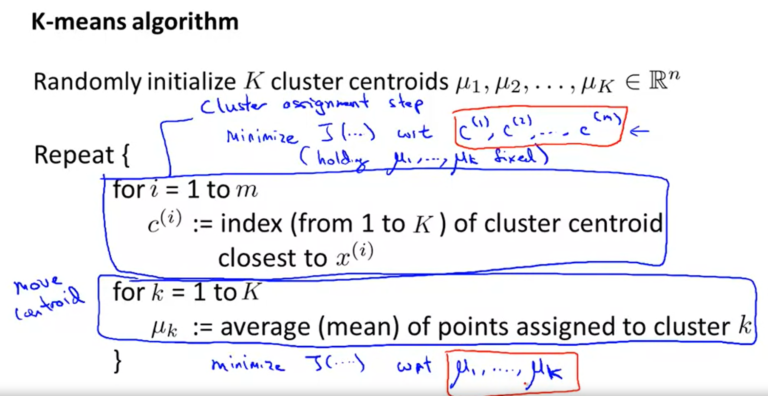

Let’s understand some of the factors that can impact the final clusters that you obtain from the K-means algorithm. This would also give you an idea about the issues that you must keep in mind before you...

Let’s go through the K-Means algorithm using a very simple example. Let’s consider a set of 10 points on a plane and try to group these points into, say, 2 clusters. So let’s see how the K-Means algorithm...
